This project seeks to understand how the guidance scale affects classifier-free sampling of conditional diffusion models. We trained a label-conditioned U-Net diffusion model on the MNIST dataset and sampled from it using classifier-free guidance. Classifier-free guidance is essential to modern image-generation diffusion models: it makes label-dependent features more prominent through a single variable, the guidance scale.
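
At each sampling step, classifier-free guidance combines two noise predictions from the same network. One prediction is conditioned on the label `c`, and the other uses a "null" label `∅`. A common form is `ε̃ = ε(x_t, ∅) + w · (ε(x_t, c) − ε(x_t, ∅))`, where `w` is the guidance scale. Some papers write the equivalent `(1 + w)·ε_c − w·ε_∅`, which shifts `w` by one. The sketch below assumes the first convention, which may not be the exact one used in this project. The function name `cfg_combine` and the model call signature in the comments are hypothetical.

```python
def cfg_combine(eps_uncond, eps_cond, guidance_scale):
    """Blend unconditional and conditional noise predictions (classifier-free guidance).

    Uses only +, - and scalar *, so it works elementwise on floats,
    NumPy arrays, or PyTorch tensors alike.
      guidance_scale = 0 -> purely unconditional prediction
      guidance_scale = 1 -> plain conditional prediction
      guidance_scale > 1 -> extrapolate past the conditional prediction
    """
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)


# Hypothetical use inside one denoising step (model signature is an assumption):
#   eps_c = model(x_t, t, label)        # conditioned on the digit label
#   eps_u = model(x_t, t, null_label)   # label dropped / replaced by a null token
#   eps = cfg_combine(eps_u, eps_c, guidance_scale=3.0)
```

Because the guided prediction replaces the ordinary noise prediction in an otherwise unchanged sampler, sweeping the guidance scale requires no retraining. The model only needs to have seen the null label during training.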

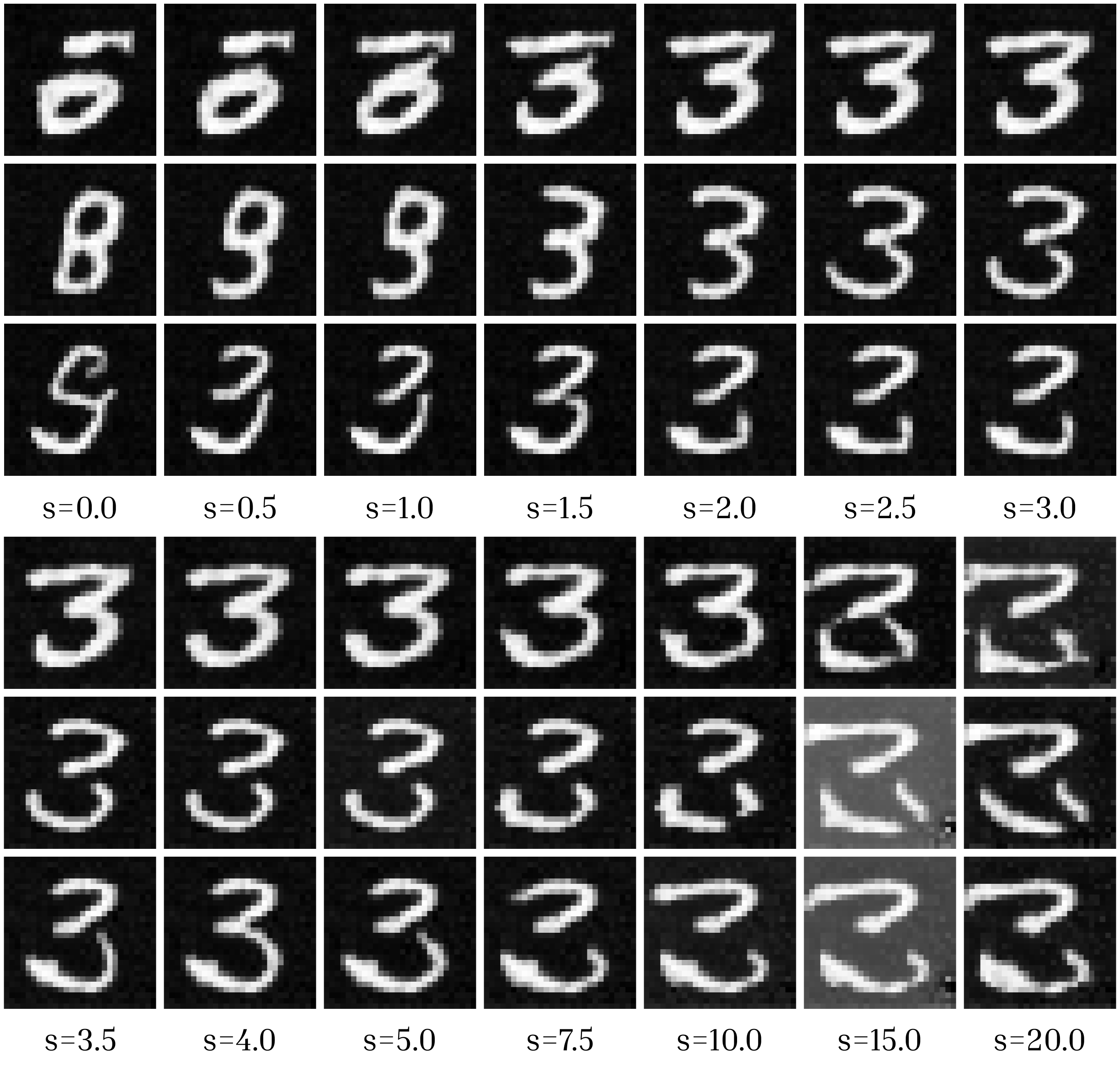

Choosing an appropriate guidance scale is critical during sampling. Low guidance scales produce cross-class artifacts and weak label representation. High guidance scales produce extreme pixel values and a lack of diversity. The optimal guidance scale lies between these extremes and balances the tradeoff between diversity and label faithfulness.
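
One heuristic for the overshoot at high scales follows directly from the guidance formula. This assumes the common parameterization `ε̃ = ε_∅ + w(ε_c − ε_∅)`, with `ε_c` and `ε_∅` the conditional and unconditional noise predictions. Subtracting the conditional prediction gives:

$$
\tilde{\epsilon} - \epsilon_c = \epsilon_\varnothing - \epsilon_c + w(\epsilon_c - \epsilon_\varnothing) = (w - 1)(\epsilon_c - \epsilon_\varnothing)
$$

For `w > 1`, the guided prediction moves past `ε_c`, away from `ε_∅`, by an amount that grows linearly with `w`. Repeated over many denoising steps, this gives one plausible route to saturated pixel values. It does not by itself explain the loss of diversity.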

This project empirically studies how the guidance scale affects the generated samples. We trained a separate classifier to predict the label of each generated sample and used its accuracy as a proxy for sample correctness.
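
The evaluation can be sketched as a sweep over guidance scales. For each scale, the sketch generates samples for every digit, classifies them, and records the fraction whose predicted label matches the conditioning label. `sample_fn` and `classify_fn` are hypothetical stand-ins for the project's sampler and classifier. The default `samples_per_label` is a placeholder, not the value used in the writeup.

```python
from typing import Callable, Dict, Sequence


def accuracy_by_guidance_scale(
    sample_fn: Callable[[int, float], object],  # hypothetical: (label, guidance_scale) -> image
    classify_fn: Callable[[object], int],       # hypothetical: image -> predicted label
    guidance_scales: Sequence[float],
    labels: Sequence[int],
    samples_per_label: int = 10,                # placeholder sample count
) -> Dict[float, float]:
    """Map each guidance scale to the classifier's accuracy on samples generated at that scale."""
    results: Dict[float, float] = {}
    for w in guidance_scales:
        correct = 0
        total = 0
        for label in labels:
            for _ in range(samples_per_label):
                image = sample_fn(label, w)
                # A sample counts as correct if the classifier recovers its conditioning label
                correct += int(classify_fn(image) == label)
                total += 1
        results[w] = correct / total
    return results
```

Classifier accuracy only measures label faithfulness. A high score at large scales can coexist with the loss of diversity described above, so accuracy alone does not identify the optimal scale.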

Read the full writeup here.

GitHub repo here.